vSphere 5.5 Enhanced LACP Support Design Considerations

Overview

Beginning with vSphere 5.1, VMware introduced LACP support on the vSphere Distributed Switch (LACP v1) to form a Link Aggregation team with physical switches. LACP is a standard method (IEEE 802.3ad) for bundling several physical network links into a single logical channel, for increased bandwidth and redundancy.

LACP enables a network device to negotiate the automatic bundling of links by sending LACP packets (LACPDUs) to its peer. Compared with static EtherChannel (the only option in vSphere 5.0 and earlier), LACP has significant benefits, such as link failure detection and failover, and protection against misconfigured or mismatched device-to-device settings.
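To illustrate the misconfiguration protection, here is a minimal Python sketch. It is a simplification of the IEEE 802.3ad/802.1AX link selection logic, not VMware's implementation: each host port records the system ID and key that the peer advertises in its LACPDUs, and only links reporting the same partner can be bundled together. The system ID and key values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class LinkState:
    """What one host port learned from the LACPDUs received on it (simplified)."""
    port: str
    partner_system_id: str  # system ID advertised by the peer switch (hypothetical value)
    partner_key: int        # key identifying the peer port-channel its port belongs to

def select_bundles(links):
    """Group links by (partner system ID, partner key): only links in one group may be bundled."""
    bundles = defaultdict(list)
    for link in links:
        bundles[(link.partner_system_id, link.partner_key)].append(link.port)
    return dict(bundles)

links = [
    LinkState("vmnic0", "00:11:22:33:44:55", 10),
    LinkState("vmnic1", "00:11:22:33:44:55", 10),
    # Miscabled: lands on a switch port that belongs to another port-channel.
    LinkState("vmnic2", "00:11:22:33:44:55", 20),
]
for (system_id, key), ports in select_bundles(links).items():
    print(f"partner {system_id} key {key}: {ports}")
```

A static EtherChannel has no such exchange, so it would blindly bundle the miscabled vmnic2 with the two others.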

However, this initial version of LACP support in vSphere 5.1 had some limitations, notably the following two:

- No benefit with regard to hashing, as load distribution remains based on IP hash (src-dst-ip);

- vSphere supports only one LACP group (Uplink Port Group with LACP enabled) per distributed switch and only one LACP group per host.

The latter is the most significant: you must enable LACP on the uplink port group… wait, so LACP will be used for all traffic on the Distributed Switch? Yes! :|

This means that you cannot override the load balancing configuration for a portgroup. As I would not recommend EtherChannel/LACP for VMkernels (except for NFS, and only with a good design), this implies separating traffic types into different Distributed Switches, which may not be possible in your context (due to constraints or requirements) or may be undesirable for manageability.

But this was for LACP v1. Enhanced LACP Support (LACP v2) in VMware vSphere 5.5 (released in September 2013) introduces a larger set of features that improve dynamic link aggregation flexibility compared with vSphere 5.1 and close the gap with competitors.

The biggest difference lies in the fact that multiple LAGs can now be created on a single Distributed Switch: vSphere 5.1 allows only one LAG per vSphere Distributed Switch, while vSphere 5.5 allows up to 64.

Another enhancement in vSphere 5.5 is the support for all LACP load balancing types (20 different hash methods).

Enhanced LACP Support configuration

As you may have guessed, you must use the vSphere Web Client to configure Enhanced LACP. :)

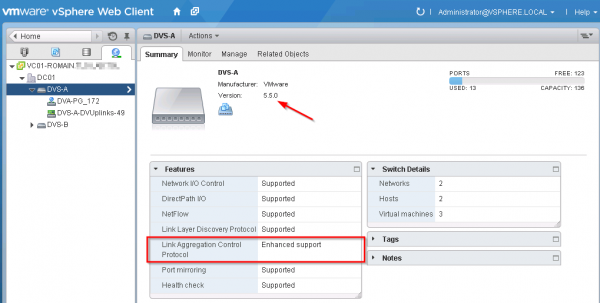

First, take a look at your VDS: Enhanced LACP Support requires a Distributed Switch at version 5.5.0.

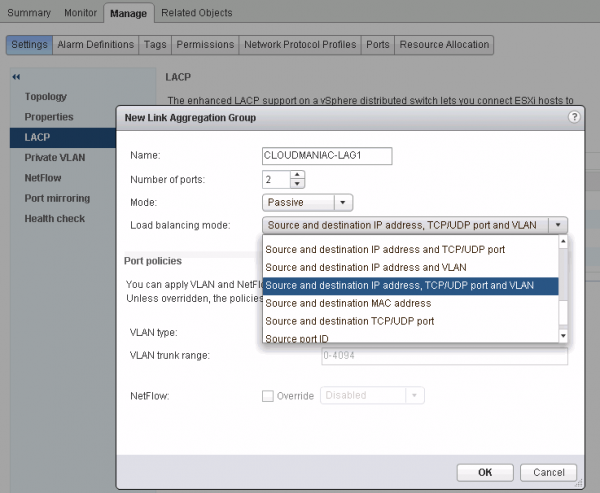

The next step is to create a new Link Aggregation Group in “Manage > Settings > LACP > New Link Aggregation Group” (via the green cross). You need to define at least a name, the number of LAG ports (24 max.), the LACP mode and the load balancing mechanism. You can create up to 64 LAGs per VDS.

By default, the VDS acts in Passive mode with the Normal interval (30 s). The differences between the two modes are:

- Active – The port is in an active negotiating state, in which the port initiates negotiations with remote ports by sending LACP packets.

- Passive – The port is in a passive negotiating state, in which the port responds to LACP packets it receives but does not initiate LACP negotiation.
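Because the VDS defaults to Passive, the physical switch ports must be Active: two passive ends wait for each other and never negotiate. The minimal Python sketch below models that rule, plus the LACP partner timeout of three missed LACPDUs. The 1 s "fast" rate comes from the LACP standard; whether a given vSphere release exposes it in the Web Client is not assumed here.

```python
from enum import Enum

class Mode(Enum):
    ACTIVE = "active"    # sends LACPDUs on its own
    PASSIVE = "passive"  # only answers LACPDUs it receives

def negotiates(local: Mode, remote: Mode) -> bool:
    """LACP negotiation starts only if at least one end is Active."""
    return Mode.ACTIVE in (local, remote)

# Periodic LACPDU transmission interval, in seconds.
INTERVAL_SECONDS = {"normal": 30, "fast": 1}

def partner_timeout(interval: str) -> int:
    """A partner is considered gone after three consecutive missed LACPDUs."""
    return 3 * INTERVAL_SECONDS[interval]

for vds_mode in Mode:
    for switch_mode in Mode:
        state = "LAG up" if negotiates(vds_mode, switch_mode) else "no LAG"
        print(f"VDS {vds_mode.value:7} / switch {switch_mode.value:7}: {state}")
print("normal:", partner_timeout("normal"), "s, fast:", partner_timeout("fast"), "s")
```

With the Normal interval, a silent failure on the far side of a link can therefore take up to about 90 seconds to be detected by LACP itself (link-down events are detected much faster).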

Concerning the load balancing mechanism, vSphere 5.5 supports these types:

- Destination IP address

- Destination IP address and TCP/UDP port

- Destination IP address and VLAN

- Destination IP address, TCP/UDP port and VLAN

- Destination MAC address

- Destination TCP/UDP port

- Source IP address

- Source IP address and TCP/UDP port

- Source IP address and VLAN

- Source IP address, TCP/UDP port and VLAN

- Source MAC address

- Source TCP/UDP port

- Source and destination IP address

- Source and destination IP address and TCP/UDP port

- Source and destination IP address and VLAN

- Source and destination IP address, TCP/UDP port and VLAN

- Source and destination MAC address

- Source and destination TCP/UDP port

- Source port ID

- VLAN

Note: these policies are configured per LAG.
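To see why the choice of hash matters, here is a minimal Python sketch of hash-based LAG port selection. It XORs the header fields that a policy names and takes the result modulo the number of LAG ports. This XOR folding is a stand-in chosen for readability, not the hash vSphere actually uses, and the addresses and ports are hypothetical (eight NFS-like flows between the same two hosts).

```python
import ipaddress
from functools import reduce
from operator import xor

def field_value(packet: dict, name: str) -> int:
    """Turn one header field into an integer (IP addresses included)."""
    value = packet[name]
    if name.endswith("_ip"):
        return int(ipaddress.ip_address(value))
    return int(value)

def select_lag_port(packet: dict, fields: tuple, num_ports: int) -> int:
    """Return the LAG port index for a packet under a policy hashing `fields`."""
    h = reduce(xor, (field_value(packet, f) for f in fields), 0)
    return h % num_ports

flows = [
    {"src_ip": "10.0.0.1", "dst_ip": "10.0.0.2", "src_port": 49152 + i, "dst_port": 2049}
    for i in range(8)
]
ip_only = {select_lag_port(f, ("src_ip", "dst_ip"), 4) for f in flows}
with_l4 = {select_lag_port(f, ("src_ip", "dst_ip", "src_port", "dst_port"), 4) for f in flows}
print(sorted(ip_only))  # [3]
print(sorted(with_l4))  # [0, 1, 2, 3]
```

With source and destination IP only, every flow between the two hosts lands on the same LAG port, which is the vSphere 5.1 limitation mentioned earlier; adding the TCP/UDP ports spreads the flows across all four ports. Because XOR is symmetric, both directions of a flow also hash to the same port.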

Depending on the context, you may now migrate network traffic to this new LAG, or add hosts to the Distributed Switch. I will not cover the details of the migration to LACP in this post, but one of the required steps is to change the configuration of your existing distributed portgroups (or create new ones) to move the newly created LAG to “Active Uplinks”.

The LAG is represented as an uplink like any other physical NIC and can be selected in the failover order configuration. Also note the warning next to the load balancing drop-down menu: the portgroup load balancing policy has no effect, because the LAG's own policy applies.

If you try to keep standalone uplink(s) in Active or Standby, the system will warn you and prevent you from continuing: indeed, mixing LAGs and standalone uplinks in a portgroup is not supported.
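The two portgroup rules (no mixing of a LAG with standalone uplinks, and a single LAG per portgroup) can be expressed as a small validation function. This is a minimal Python sketch with hypothetical uplink and LAG names; it only checks the case where a LAG is an active uplink, as in the step described above, and it does not reproduce the Web Client's own checks.

```python
def teaming_errors(active, standby, lag_names):
    """Return the rule violations of a portgroup failover order (simplified sketch).

    active, standby: lists of uplink names; lag_names: names of the LAGs on the VDS.
    """
    if not any(u in lag_names for u in active):
        return []  # classic teaming: no LAG-specific rule applies
    errors = []
    uplinks = [*active, *standby]
    lags = {u for u in uplinks if u in lag_names}
    if len(lags) > 1:
        errors.append("only one LAG may carry a portgroup's traffic")
    standalone = [u for u in uplinks if u not in lag_names]
    if standalone:
        errors.append("standalone uplinks mixed with a LAG: " + ", ".join(standalone))
    return errors

lags = {"lag1"}
print(teaming_errors(["lag1"], [], lags))              # []
print(teaming_errors(["lag1"], ["Uplink 1"], lags))    # ['standalone uplinks mixed with a LAG: Uplink 1']
print(teaming_errors(["Uplink 1"], ["Uplink 2"], lags))  # []
```

The last call shows that a portgroup which does not use the LAG (for example a management portgroup in Active/Standby) is unaffected.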

To migrate existing hosts already attached to the Distributed Switch, select your VDS and navigate to “Actions > Add and Manage Hosts”:

- choose “Manage host networking” and select the host(s) that you wish to edit,

- choose “Manage physical adapters” on the next screen,

- for each desired vmnic, use the “Assign uplink” button to assign the physical uplink to a LAG port.

Note: the LAG ports are automatically suffixed (-0 and -1).
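The per-host mapping done in the “Assign uplink” dialog can be pictured as follows. This is a minimal Python sketch assuming a LAG named lag1 (hypothetical) and one vmnic per LAG port on a given host; the 24-port cap comes from the LAG creation dialog described earlier.

```python
MAX_LAG_PORTS = 24  # upper bound offered when creating a LAG

def lag_port_names(lag_name, num_ports):
    """LAG ports take the LAG name plus a -0, -1, ... suffix."""
    if num_ports > MAX_LAG_PORTS:
        raise ValueError(f"a LAG has at most {MAX_LAG_PORTS} ports")
    return [f"{lag_name}-{i}" for i in range(num_ports)]

def assign_uplinks(vmnics, lag_name, num_ports):
    """Map one host's vmnics onto the LAG ports, one vmnic per LAG port."""
    ports = lag_port_names(lag_name, num_ports)
    if len(vmnics) > len(ports):
        raise ValueError(f"{lag_name} has {len(ports)} ports but {len(vmnics)} vmnics were given")
    return dict(zip(ports, vmnics))

print(assign_uplinks(["vmnic2", "vmnic3"], "lag1", 2))
# {'lag1-0': 'vmnic2', 'lag1-1': 'vmnic3'}
```

Sizing the LAG with as many ports as the largest number of vmnics you plan to bundle on any host avoids having to edit the LAG later.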

Finally, if you go to “Your VDS > Manage > Settings > Topology”, you will have a nice graphical representation of the result.

Enhanced LACP Support Trade-offs & Limitations

Even though the LACP implementation evolved in the right direction with vSphere 5.5, there are still some limitations on its usage:

- LACP support is not compatible with software iSCSI multipathing. iSCSI multipathing should not be used with normal static EtherChannels or with LACP bonds.

- LACP teaming does not support beacon probing network failure detection.

- LACP configuration settings are (still) not present in Host Profiles.

- LACP is not supported between two nested (virtualized) ESXi hosts.

- LACP cannot be used in conjunction with the ESXi dump collector. For this feature to work, the vmkernel port used for management purposes must be on a vSphere Standard Switch.

- Port Mirroring cannot be used in conjunction with LACP to mirror LACPDU packets used for negotiation and control.

- The teaming health check does not work for LAG ports as the LACP protocol itself is capable of ensuring the health of the individual LAG ports. However, VLAN and MTU health check can still check LAG ports.

- Enhanced LACP support is limited to a single LAG handling the traffic of each distributed port or distributed port group (dvPortGroup).

- You can create up to 64 LAGs on a distributed switch. A host can support up to 32 LAGs. However, the number of LAGs that you can actually use depends on the capabilities of the underlying physical environment and the topology of the virtual network. For example, if the physical switch supports up to four ports in an LACP port channel, you can connect up to four physical NICs per host to a LAG.

Source: Limitations of LACP in VMware vSphere 5.5.
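The sizing limits above can be checked before building anything. Below is a minimal Python sketch using the figures quoted in this post (64 LAGs per VDS, 32 per host, 24 ports per LAG); the physical switch's port-channel maximum is a parameter you would take from your switch vendor, and the values in the example are hypothetical.

```python
MAX_LAGS_PER_VDS = 64   # per distributed switch
MAX_LAGS_PER_HOST = 32  # per ESXi host
MAX_PORTS_PER_LAG = 24  # LAG ports defined at LAG creation

def max_nics_per_lag(switch_max_ports):
    """A host can only use as many NICs in a LAG as the physical port-channel accepts."""
    return min(MAX_PORTS_PER_LAG, switch_max_ports)

def check_design(lags_on_vds, lags_per_host, nics_per_lag, switch_max_ports):
    """Return the list of limits a planned LACP design would exceed."""
    problems = []
    if lags_on_vds > MAX_LAGS_PER_VDS:
        problems.append(f"{lags_on_vds} LAGs exceed the {MAX_LAGS_PER_VDS} allowed per VDS")
    if lags_per_host > MAX_LAGS_PER_HOST:
        problems.append(f"{lags_per_host} LAGs exceed the {MAX_LAGS_PER_HOST} allowed per host")
    limit = max_nics_per_lag(switch_max_ports)
    if nics_per_lag > limit:
        problems.append(f"{nics_per_lag} NICs per LAG exceed the usable maximum of {limit}")
    return problems

# A switch accepting four ports per port-channel caps each host at four NICs per LAG.
print(check_design(lags_on_vds=2, lags_per_host=2, nics_per_lag=6, switch_max_ports=4))
```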

Design Considerations

Although this feature is not the answer to all your network problems, Enhanced LACP Support is a huge step forward in vSphere 5.5. For example, you could have, in the same VDS, the Management and vMotion VMkernels in a double Active/Standby configuration, and a 4-port LAG serving Virtual Machine traffic.

In vSphere 5.1, you would have had either to split this into two VDS or to use LACP for all network traffic types.

Design considerations for the usage of Enhanced LACP Support in vSphere 5.5:

- Understand the limitations, constraints and requirements of this feature first,

- Don’t use LAGs for loop prevention: you can’t create a loop with vSphere hosts,

- Remember that the LAG load balancing policy always overrides the load balancing policy of any Distributed Port Group that uses the LAG,

- I do not recommend EtherChannel/LACP for VMkernels. The only use case would be NFS, and only with a good design,

- Prefer a Multi-Chassis LAG (MLAG or MC-LAG), whether with Cisco technologies (VSS or vPC) or with stacking solutions,

- In situations with more east-west traffic (between servers), LACP combined with MLAG is more efficient than any other load balancing mechanism: for example, LACP with vPC on Cisco switches guarantees that VMs running on different hosts will always communicate through one switch. For more information and nice diagrams, please refer to Chris Wahl's post, Revenge of the LAG: Networks in Paradise,

- Don’t forget to back up your Distributed Switch before making any structural change to the configuration,

- (Unfortunately) LACP is not available on the Standard vSwitch and requires a Distributed Switch, so you need Enterprise Plus or VSAN licenses (indeed, the Distributed Switch feature is included in the VSAN license),

- LACP goes against the K.I.S.S. principle, as it requires configuration on the physical switch side and adds constraints to your design. However, the switch configuration can be done on a port-channel basis rather than on each port,

- LACP may not perform better than the Route based on physical NIC load (a.k.a. Load-Based Teaming, or LBT) load balancing option, which is less complex to implement (no configuration needed on host-facing ports),

- If you expect network contention, you might want to use Network I/O Control (and create some User-defined Network Resource Pools),

- Except for Cisco UCS (and only in some cases), think twice before targeting a LACP implementation in a blade environment,

- Don’t forget that LACP requires manual configuration on the physical network switch(es),

- Coordinate with the network team to explain your needs and agree on the target configuration. If you don’t have network SMEs, consult your switch vendor for recommendations, or use LBT and forget about LACP,

- Hosts with a mix of 1GbE and 10GbE uplinks may benefit from Enhanced LACP Support by using multiple port-channels,

- Remember to choose the option that fits your context, not the one that looks most attractive on paper (or the one your neighbor's nephew told you to use),

- Each time you try to use LACP for iSCSI traffic, I will sacrifice a puppy,

- If LACP is really desired, you can also look at the Cisco Nexus 1000V, but that’s another story. :)
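To make the LBT comparison in the list above more concrete: LBT moves a virtual port to another uplink when an uplink's mean utilization exceeds 75% over a 30-second period. The Python sketch below models one rebalancing pass under simplifying assumptions (one load figure per port in Mbps, busiest port moved first, hypothetical VM and vmnic names); it is an illustration, not VMware's actual algorithm. It also shows why LBT needs no switch-side configuration: each virtual port stays pinned to a single uplink, so the physical switch only sees a MAC address moving from one port to another.

```python
LBT_THRESHOLD = 0.75  # mean utilization that triggers a move (over a 30 s window)

def utilization(port_load, placement, capacity):
    """Fraction of capacity used on each uplink, given where each virtual port is pinned."""
    used = {uplink: 0 for uplink in capacity}
    for port, uplink in placement.items():
        used[uplink] += port_load[port]
    return {uplink: used[uplink] / capacity[uplink] for uplink in capacity}

def lbt_pass(port_load, placement, capacity, threshold=LBT_THRESHOLD):
    """One simplified rebalancing pass: move the busiest port off each saturated uplink."""
    placement = dict(placement)  # do not mutate the caller's mapping
    for uplink in capacity:
        util = utilization(port_load, placement, capacity)
        if util[uplink] <= threshold:
            continue
        busiest = max((p for p, u in placement.items() if u == uplink), key=port_load.get)
        target = min(capacity, key=util.get)  # least-utilized uplink
        if target != uplink and util[target] + port_load[busiest] / capacity[target] <= threshold:
            placement[busiest] = target
    return placement

capacity = {"vmnic0": 10_000, "vmnic1": 10_000}            # Mbps, hypothetical 10GbE uplinks
port_load = {"vm-a": 6_000, "vm-b": 3_000, "vm-c": 1_000}  # Mbps, hypothetical VMs
placement = {"vm-a": "vmnic0", "vm-b": "vmnic0", "vm-c": "vmnic0"}
print(lbt_pass(port_load, placement, capacity))
# {'vm-a': 'vmnic1', 'vm-b': 'vmnic0', 'vm-c': 'vmnic0'}
```

The trade-off is granularity: LBT balances per virtual port, so a single VM never exceeds one uplink's bandwidth, whereas a LAG hash can spread one VM's flows across several links.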